Proxies* are the frontend of the backend. Whether it is an API gateway, a layer 7 load balancer, or a CDN, the proxy server receives the lion’s share of connections from clients. This makes it challenging to accept a large number of connections as fast as possible and also process* logical requests on those connections in a balanced manner.

I discussed the common architecture patterns for managing connections with multi-threaded proxies and backend applications. However, as soon as I posted that article I was reminded that Envoy has an interesting architecture style when it comes to managing connections. Let us do a recap of how Envoy manages connections by default and how the listener connection balancing works. We are assuming TCP in all discussions in this article.

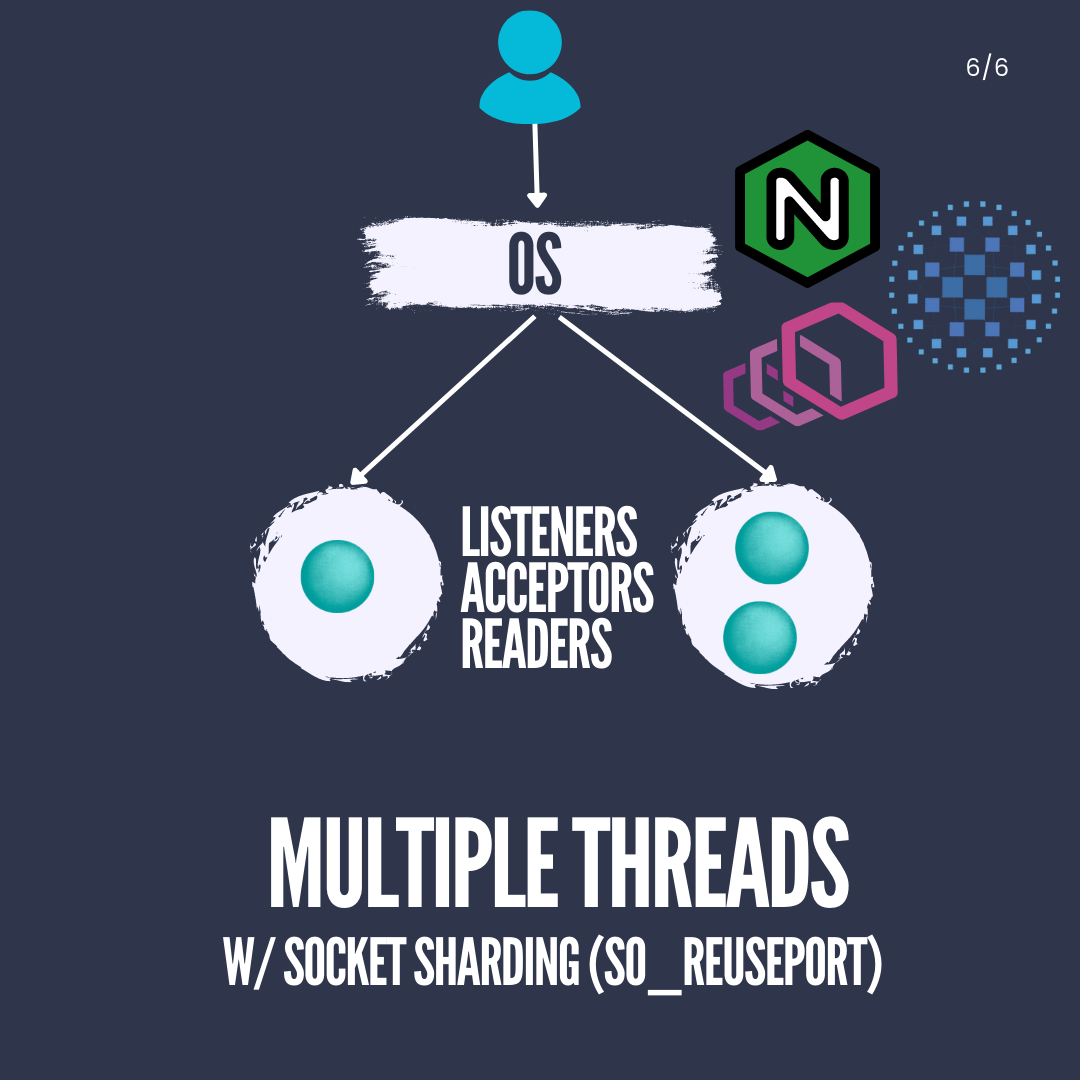

Socket Sharding — SO_REUSEPORT

Just like HAProxy and NGINX, socket sharding is the default listener configuration in Envoy. This means all of Envoy’s listener threads listen on the same port configured by the user. Normally this results in an "address already in use" error, as no two processes (or threads) can listen on the same port. However, if you specify the socket option SO_REUSEPORT on the listener, each thread can create its own socket and listen on the same port.
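To make the idea concrete, here is a minimal Python sketch of socket sharding. This is not Envoy’s code; the helper name open_sharded_listeners and the backlog of 128 are my own assumptions, and it assumes a platform that exposes SO_REUSEPORT (Linux, for example).

```python
import socket


def open_sharded_listeners(host: str, port: int, shards: int) -> list[socket.socket]:
    """Open `shards` listening sockets that all share one address and port."""
    listeners = []
    for _ in range(shards):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        # The option must be set before bind() on every socket sharing the port.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
        sock.bind((host, port))
        sock.listen(128)  # backlog value is an arbitrary choice for this sketch
        # If the caller passed port 0, reuse the port the kernel picked.
        port = sock.getsockname()[1]
        listeners.append(sock)
    return listeners


# Four sockets, one port. In a real proxy each thread would own one of them.
listeners = open_sharded_listeners("127.0.0.1", 0, shards=4)
print([s.getsockname() for s in listeners])
for s in listeners:
    s.close()
```

Each thread would then call accept() on its own socket only, so the threads never contend on a shared accept queue.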

This effectively creates a queue for each socket bound to the same port/address. Once the operating system receives the final ACK of the TCP three-way handshake, it moves the ready connection to an accept queue, where the thread owning the socket can call accept() to create the file descriptor for the connection and start reading from and writing to it. The OS decides which queue to move the connection to based on a hash of the four-tuple (source IP, source port, destination IP, destination port). We know the destination IP and port are the same; what changes is the source side. With a large number of connections, those will be distributed across all sockets, and if each thread owns a socket, each thread will be assigned a certain number of connections.
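The selection step can be sketched as a simulation. Everything here is an assumption for illustration: the kernel uses its own hash function, not SHA-256, and the client addresses are made up. The point is only that the four-tuple deterministically picks a queue.

```python
import hashlib
from collections import Counter


def pick_socket(src_ip: str, src_port: int, dst_ip: str, dst_port: int, sockets: int) -> int:
    """Map a connection's four-tuple to one of `sockets` accept queues."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    # SHA-256 is a stand-in here; the kernel uses its own hash.
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % sockets


def distribute(connections, sockets: int) -> Counter:
    """Count how many connections land on each socket index."""
    return Counter(pick_socket(*conn, sockets) for conn in connections)


# 50 hypothetical clients connecting to the same destination 10.0.0.1:443.
conns = [(f"192.168.1.{i}", 40000 + i, "10.0.0.1", 443) for i in range(1, 51)]
per_socket = distribute(conns, sockets=4)
print(sorted(per_socket.items()))
```

Running it with different client sets shows how the per-socket counts drift away from an even split, since nothing in the hash knows how loaded each socket already is.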

Problem with Socket Sharding

Are the connections actually well balanced between all sockets and threads? Not really. The hashing algorithm is far from perfect, and the chances that one thread will get far more connections than the others are very high. This doesn’t really matter when you have tons of short-lived connections (e.g. HTTP/1.1 connections from browsers). However, if your clients use long-lived connections with tons of requests on each, it can become a problem. This is especially true for gRPC, h2, or connections initiated from a service mesh (proxies talking to proxies), where once the TCP connection is established, the client sends all sorts of requests through it. So whatever poor thread ends up hosting the gRPC connection will be busy executing tons of multiplexed requests from the client.

Any amount of imbalance here will significantly affect performance. If you have 50 gRPC connections and 4 threads, the socket sharding (SO_REUSEPORT) hashing algorithm might assign 35 connections to thread 1, 5 connections to thread 2, 9 connections to thread 3, and 1 connection to thread 4. Thread 1 becomes a hotspot while the other threads are not as busy.
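To put numbers on that example, here is a small sketch that measures the skew for a 35/5/9/1 split. The function name imbalance and the metrics it reports are my own choices, not anything Envoy exposes.

```python
def imbalance(connections_per_thread: list[int]) -> dict:
    """Summarize how far the busiest thread is from an even split."""
    total = sum(connections_per_thread)
    ideal = total / len(connections_per_thread)
    busiest = max(connections_per_thread)
    return {
        "ideal_per_thread": ideal,           # what a perfect split would give
        "busiest_share": busiest / total,    # fraction of all connections
        "busiest_vs_ideal": busiest / ideal, # how many times over the ideal
    }


stats = imbalance([35, 5, 9, 1])
print(stats)
```

With an ideal of 12.5 connections per thread, the busiest thread holds 70% of all connections, which is 2.8 times its fair share.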

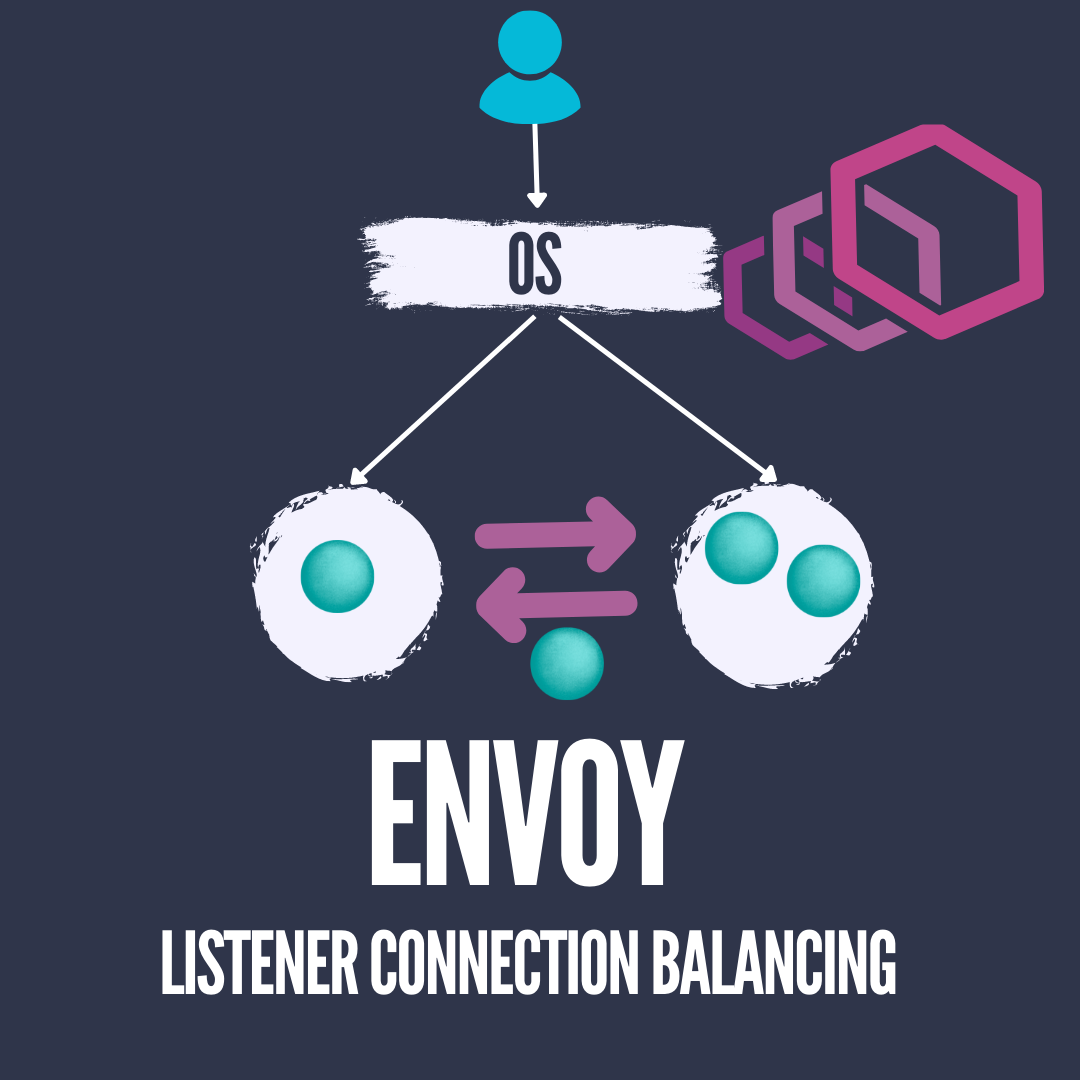

How did Envoy solve this?

Envoy realized the problem: clients that happen to land on the busy thread 1 will suffer from performance degradation, not to mention that the upstream backend connection pool in thread 1 will be exhausted. So Envoy came up with listener connection balancing, where threads start talking to each other (something they didn’t use to do) and exchange sockets to balance out the load if one thread has more sockets. I don’t have enough technical details on exactly how this is done, as the doc doesn’t go into much detail.
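Since I don’t have the exact details, the following is only my guess at what such balancing could look like, not Envoy’s actual implementation. It assumes a shared counter of open connections per worker and a hand-off of each newly accepted connection to the worker with the fewest. The class and method names are hypothetical.

```python
import threading


class ConnectionBalancer:
    """Hypothetical balancer shared by all listener threads."""

    def __init__(self, workers: int) -> None:
        self._lock = threading.Lock()
        self._active = [0] * workers  # open connections per worker

    def pick_worker(self) -> int:
        """Called by whichever thread accepted the connection."""
        with self._lock:
            # Choose the worker with the fewest open connections (first on ties).
            target = min(range(len(self._active)), key=self._active.__getitem__)
            self._active[target] += 1
            return target

    def connection_closed(self, worker: int) -> None:
        """Called when a connection owned by `worker` goes away."""
        with self._lock:
            self._active[worker] -= 1

    def snapshot(self) -> list[int]:
        with self._lock:
            return list(self._active)


balancer = ConnectionBalancer(workers=4)
owners = [balancer.pick_worker() for _ in range(50)]
print(balancer.snapshot())  # [13, 13, 12, 12]
```

The lock is the price of this design: threads that previously never talked to each other now synchronize on every accepted connection, which is cheap for long-lived connections but adds overhead for short-lived ones.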

In my opinion, while I find this architecture interesting, I don’t think it is as effective as it sounds. Here is why. Even if you have 4 threads and each is assigned an equal number of gRPC connections, you still lack knowledge of the load. Thread 1 might be serving a greedy client sending tons of logical requests that require heavy processing, while threads 2–4 host less demanding clients. This is where message-based load balancing can be useful: the listener threads don’t do the processing but parse application requests instead, then forward the requests to worker threads that do the work. Because the listener threads know how much work each worker thread is doing, this can result in true load balancing.
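Here is a rough sketch of what I mean by message-based load balancing. The RequestDispatcher class and the per-request cost value are assumptions for illustration; a real proxy would not know the cost up front and might count in-flight requests instead.

```python
class RequestDispatcher:
    """Hypothetical listener-side dispatcher that tracks work per worker."""

    def __init__(self, workers: int) -> None:
        self.outstanding = [0] * workers  # estimated cost of in-flight requests

    def dispatch(self, cost: int) -> int:
        """Send one parsed request to the least-loaded worker; return its index."""
        target = min(range(len(self.outstanding)), key=self.outstanding.__getitem__)
        self.outstanding[target] += cost
        return target

    def complete(self, worker: int, cost: int) -> None:
        """Release the work once the worker has finished the request."""
        self.outstanding[worker] -= cost


dispatcher = RequestDispatcher(workers=4)
# One greedy client sends 8 expensive requests; three light clients send 4 cheap ones each.
requests = [10] * 8 + [1] * 12
for cost in requests:
    dispatcher.dispatch(cost)
print(dispatcher.outstanding)  # [23, 23, 23, 23]
```

With connection-level pinning, the thread hosting the greedy client would carry all 80 units of expensive work while the others carry 4 each. Dispatching per request spreads the same work evenly, at the cost of an extra hop between listener and worker.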

Learn the fundamentals of network engineering to build effective backend applications. To get my Udemy course, head to

https://network.husseinnasser.com

for a discount coupon and support.

*Proxies here refers to both proxies and reverse proxies.

*Request processing here refers to parsing the TCP connection stream looking for L7 application requests. It also includes writing the request to the backend upstream connection.